A Monumental Migration to SQL Server 2016 - Part 2

In my previous post, I outlined the preparations we undertook to migrate a large SQL Server 2008R2 instance to SQL Server 2016. This post details migration day.

Final Prep

We completed our nightly backups as usual on Friday night, so when I arrived Saturday I kicked off a final differential backup to catch any overnight changes. We’ve multi-threaded Ola Hallengren’s backup script by creating multiple jobs, and I started them all at once with (of course) PowerShell.

Get-DbaAgentJob -SqlInstance $OldInstance | Where-Object {$_.name -like 'user database backups - diff*'} | ForEach-Object {$_.Start()};

I estimated that the diff backups would take about 90 minutes based on a couple of test runs; they took 100 minutes (not too shabby!). While they ran, I re-exported the Agent jobs just to be sure I had everything captured there. I also copied a few databases to another 2008R2 instance in case they were needed for debugging purposes.

The very last step was to extract a listing of all our databases and the full paths to the physical data and log files, then split them into ten files. The method I used to attach the databases didn’t scale to all eight thousand databases at once, and splitting the list let me control the batch sizes easily.

The resulting CSV file gave me each database file, the type of file (data or log), and the database name itself.

| database_id | name | FileType | physical_name |
|---|---|---|---|
| 7 | MyDatabase | ROWS | S:\Very\Long\Path\MyDatabase.mdf |
| 7 | MyDatabase | LOG | S:\Very\Long\Path\MyDatabase_1.ldf |
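As an illustration of the batching idea, here is a minimal sketch of one way to assign databases to a fixed number of batches while keeping every file of a database in the same batch. It is not the script used on migration day: the function name `Add-BatchNumber`, the round-robin assignment, and the sample rows are all assumptions made for the example.

```powershell
# Sample rows in the same shape as the CSV above (values are made up).
$sampleRows = @'
database_id,name,FileType,physical_name
7,Alpha,ROWS,S:\Data\Alpha.mdf
7,Alpha,LOG,S:\Data\Alpha_1.ldf
8,Beta,ROWS,S:\Data\Beta.mdf
8,Beta,LOG,S:\Data\Beta_1.ldf
9,Gamma,ROWS,S:\Data\Gamma.mdf
9,Gamma,LOG,S:\Data\Gamma_1.ldf
'@ | ConvertFrom-Csv

# Hypothetical helper: emits each input row with an extra Batch property.
function Add-BatchNumber {
    param(
        [Parameter(Mandatory)] [object[]] $Rows,
        [int] $BatchCount = 10
    )

    # Distinct database names in a stable order.
    $names = @($Rows | Select-Object -ExpandProperty name -Unique | Sort-Object)

    # Round-robin: database 0 -> batch 1, database 1 -> batch 2, and so on.
    $batchOf = @{}
    for ($i = 0; $i -lt $names.Count; $i++) {
        $batchOf[$names[$i]] = ($i % $BatchCount) + 1
    }

    # Every file row inherits the batch number of its database.
    $Rows | Select-Object -Property '*', @{ Name = 'Batch'; Expression = { $batchOf[$_.name] } }
}

# Summarize how many file rows landed in each batch.
$batched = Add-BatchNumber -Rows $sampleRows -BatchCount 2
$batched | Group-Object -Property Batch | ForEach-Object {
    '{0} file rows in batch {1}' -f $_.Count, $_.Name
}
```

To write one file per batch, the `ForEach-Object` body could instead pipe `$_.Group` to `Export-Csv` with a path built from `$_.Name`.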

Time to Move

With all of our pre-migration work complete, we shut down the SQL Server 2008R2 instance. Then we turned things over to the folks in the datacenter to detach the storage LUN, take the snapshot, and attach the LUN to the new instance. When their work was complete, they passed the baton back to me to move the files around and attach the databases.

Attaching the Databases

Before reading this section, I suggest you read two other recent posts, PowerShell Multithreading with PoshRSJob and Thread-safe PowerShell Logging with PSFramework, as they’ll provide the background for how some of this was done.

I created my own function as a wrapper for Mount-DbaDatabase from dbatools, adding the extra features I needed (or thought I needed):

- Logging

- Multi-threading

- Logically renaming the files

- Setting the database owner appropriately

- Rebuilding indexes

- Upgrading the CompatibilityLevel
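To give a feel for the core of such a wrapper, here is a minimal sketch that turns rows from the file listing into parameter sets for `Mount-DbaDatabase`. It is an illustration only, not the actual wrapper function: the helper name `New-MountParameter`, the default owner of `sa`, and the sample rows are assumptions, and logging, multi-threading, and logical file renaming are left out.

```powershell
# Sample rows in the shape of the file listing (values are made up).
$sampleRows = @'
database_id,name,FileType,physical_name
7,Alpha,ROWS,S:\Data\Alpha.mdf
7,Alpha,LOG,S:\Data\Alpha_1.ldf
8,Beta,ROWS,S:\Data\Beta.mdf
8,Beta,LOG,S:\Data\Beta_1.ldf
'@ | ConvertFrom-Csv

# Hypothetical helper: one hashtable of Mount-DbaDatabase parameters per database.
function New-MountParameter {
    param(
        [Parameter(Mandatory)] [string] $SqlInstance,
        [Parameter(Mandatory)] [object[]] $Rows,
        [string] $DatabaseOwner = 'sa'   # assumption; use whatever owner is appropriate
    )

    foreach ($db in ($Rows | Group-Object -Property name)) {
        # Mount-DbaDatabase takes the data and log file paths as a StringCollection.
        $files = New-Object System.Collections.Specialized.StringCollection
        foreach ($file in $db.Group) {
            [void] $files.Add($file.physical_name)
        }

        @{
            SqlInstance   = $SqlInstance
            Database      = $db.Name
            FileStructure = $files
            DatabaseOwner = $DatabaseOwner
        }
    }
}

# With dbatools loaded and a reachable instance, each set can be splatted:
# foreach ($params in New-MountParameter -SqlInstance $NewInstance -Rows $sampleRows) {
#     Mount-DbaDatabase @params
# }
```

Building the parameters separately from the attach call makes it easy to inspect or log exactly what will be attached before anything touches the instance.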

In practice, I only used the first four in the initial attachment of the databases for the upgrade. Example execution:

Get-MountProgress is a variation on one of the functions in my multithreading post above, which let me keep tabs on the progress as the function ran. I ran the above code ten (well, eleven) times, once for each “batch” of 10% of the databases. The first group ran great! Only about 6 minutes to attach the databases. The next batch was 10 minutes. Then 16. And then, and then, and then…

Progress just kept getting slower

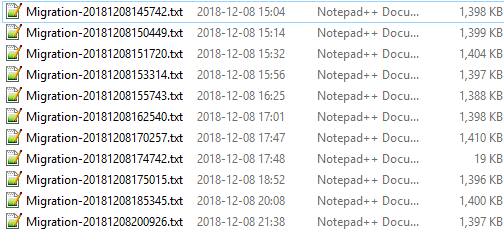

From the timestamps on the log files, you can see that each batch took progressively longer. It was agonizing once we got past the fifth group. I have seen SMO’s enumeration take a long time when connecting to an instance with a large number of databases, which fits the pattern here: the more databases attached, the longer each connection takes. But I can’t attribute the slowdown entirely to this.

Partway through, we shifted gears a bit and I ran a special group of databases which hadn’t yet been attached but the QA team needed for their testing. This let them get rolling on their test plans without waiting longer.

Memory consumption for powershell.exe was very high as well, and kept growing with each batch. After the 3rd batch, I decided I needed to exit PowerShell after each one and restart it just to keep that from getting out of hand. I’m not sure what happened there; maybe a runspace memory leak?

I had estimated attaching the databases would take about one hour. It took over six and a half. There was no one more upset over this than me. On the upside, the logs showed nothing but success.

Validation

Once we realized that attaching the databases was going to run longer than expected, our developers and QA team pivoted to testing what they could with the databases we had attached early on. In hindsight, I should have asked them which databases they needed for their test plans and attached those right away, so that they could test while the remainder of the databases were being attached. But, great news! They didn’t find any issues that could be directly attributed to how we did the migration of the data.

I re-ran my earlier PowerShell to fetch the databases and their files from sys.databases against the new instance and compared to the original; everything matched! Confirmation that all of the databases were attached.
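A comparison like this can be sketched with `Compare-Object`. The function name `Compare-DatabaseFileList`, the default of comparing on `name` and `FileType`, and the sample rows are assumptions for the example; add `physical_name` to the property list if the paths are expected to be identical on both instances.

```powershell
# Sample "before" and "after" listings (values are made up).
$before = @'
name,FileType,physical_name
Alpha,ROWS,S:\Data\Alpha.mdf
Alpha,LOG,S:\Data\Alpha_1.ldf
Beta,ROWS,S:\Data\Beta.mdf
Beta,LOG,S:\Data\Beta_1.ldf
'@ | ConvertFrom-Csv

# Simulate a database whose log file never made it to the new instance.
$after = @($before | Where-Object { -not ($_.name -eq 'Beta' -and $_.FileType -eq 'LOG') })

# Hypothetical helper: returns nothing when the two listings match.
function Compare-DatabaseFileList {
    param(
        [Parameter(Mandatory)] [object[]] $Before,
        [Parameter(Mandatory)] [object[]] $After,
        [string[]] $Property = @('name', 'FileType')
    )

    # SideIndicator '<=' marks rows found only in $Before, '=>' rows only in $After.
    Compare-Object -ReferenceObject $Before -DifferenceObject $After -Property $Property
}

$differences = @(Compare-DatabaseFileList -Before $before -After $after)
if ($differences.Count -eq 0) {
    'Everything matched.'
} else {
    $differences | Format-Table -AutoSize
}
```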

Final Steps

We missed the estimated time for our go/no-go decision by five minutes. With the number of moving parts, databases in play, unexpected delays, and amount of testing we had to do, that’s pretty good! My colleague and I had some additional work we needed to take care of after the team declared the migration a success. Agent jobs needed to be enabled, overnight job startups monitored, things like that. We called it a day after about 14 hours in the office.

The next day, we had some more tasks to complete. Per a blog post by Erin Stellato back in May, because we upgraded from 2008R2 to 2016, an index rebuild was advisable for all our nonclustered indexes. I did this via Red Gate Multi Script and some dynamic SQL instead of PowerShell this time, looping through all the NC indexes in each database and running ALTER INDEX REBUILD.
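For readers who would rather stay in PowerShell, the statement-generation half of that job can be sketched as follows. This is an alternative illustration, not the dynamic SQL that was actually run: the function names, the catalog query, and the filters on disabled, hypothetical, and system-shipped objects are assumptions.

```powershell
# Catalog query listing nonclustered indexes in the current database.
# Run it per database with whatever query tool is at hand.
$indexQuery = @'
SELECT s.name AS SchemaName, o.name AS TableName, i.name AS IndexName
FROM sys.indexes AS i
JOIN sys.objects AS o ON o.object_id = i.object_id
JOIN sys.schemas AS s ON s.schema_id = o.schema_id
WHERE i.type_desc = 'NONCLUSTERED'
  AND i.is_disabled = 0
  AND i.is_hypothetical = 0
  AND o.is_ms_shipped = 0;
'@

# Bracket-quote an identifier the way QUOTENAME() does by default.
function ConvertTo-QuotedName {
    param([Parameter(Mandatory)] [string] $Name)
    '[' + $Name.Replace(']', ']]') + ']'
}

# Hypothetical helper: build one ALTER INDEX ... REBUILD statement.
function New-IndexRebuildStatement {
    param(
        [Parameter(Mandatory)] [string] $Schema,
        [Parameter(Mandatory)] [string] $Table,
        [Parameter(Mandatory)] [string] $Index
    )
    'ALTER INDEX {0} ON {1}.{2} REBUILD;' -f `
        (ConvertTo-QuotedName -Name $Index),
        (ConvertTo-QuotedName -Name $Schema),
        (ConvertTo-QuotedName -Name $Table)
}

# Example with a made-up index name.
New-IndexRebuildStatement -Schema 'dbo' -Table 'Orders' -Index 'IX_Orders_OrderDate'
```

Quoting each identifier guards against index or table names that contain spaces or brackets, which is easy to overlook when building statements by string concatenation.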

Aftermath

Our first few days post-upgrade didn’t go well. CPU usage and response times were terrible, and after checking over everything for days on end, we finally tracked it back to a piece of code that was still looking for the old SQL Server 2008R2 instance. Once that was fixed, everything came back to normal.

A few days post-migration, we did have one problem caused by a change in SQL Server 2016. It seems that SQL Server got a little stricter about doing subtraction of integers from time types (instead of using dateadd()). Correcting it was pretty easy as it was limited to a couple stored procedures which are used in a limited capacity.

Lessons Learned

It’s not a good project without some solid lessons learned! What could we have done to make migration day easier?

- Work out which databases need to be available for performing post-upgrade checks and attach those first

- Work in smaller batches (not sure how much this would have helped)

- Have a test environment that’s as close as possible to production in all aspects

Conclusion

I suppose you’re expecting me to say something profound here. The most surprising thing to me about this migration is that there were no major surprises. Aside from one portion taking longer than anticipated and those two small pieces of code, everything went to plan.

All in all, our migration was a success, and after working out a few glitches in the week or two after, things have been running well. We’re on a modern release now, and looking forward to taking advantage of the new features available to us.